Liang He | 何亮

Assistant Professor of Computer Science

Director, Design & Engineering for Making (DE4M) Lab

Department of Computer Science, Erik Jonsson School of Engineering and Computer Science

University of Texas at Dallas

Curriculum Vitae | liang.he@utdallas.edu | ECSS 4.224

I am an Assistant Professor of Computer Science in the Department of Computer Science, Erik Jonsson School of Engineering & Computer Science, University of Texas at Dallas (UTD). At UTD, I lead the Design & Engineering for Making (DE4M) Lab. Prior to joining UTD, I worked at Purdue University as an Assistant Professor of Interactive Media from 2022 to 2025, and visited Keio University as a visiting Associate Professor in summer 2025. I graduated from the University of Washington with a Ph.D. in Computer Science & Engineering, advised by Jon E. Froehlich. In the past, I also worked as a research intern at HP Labs, Microsoft Research in Redmond, and Keio-NUS CUTE Center.

The primary focus of my research in Human–Computer Interaction (HCI) is digital fabrication, aiming to enable customizable and personalized experiences through new pipelines, techniques, and devices that integrate computational and physical intelligence. In my research, I emphasize prototyping and tinkering as processes to explore how people might interact with the physical world across diverse domains, including making, haptics, accessibility, and education. My work has been published in top HCI and UbiComp venues, such as ACM CHI, UIST, IMWUT, TEI, and ASSETS, and has received multiple awards.

Research Overview

(see the full list of publications on Google Scholar and the DE4M Lab website)

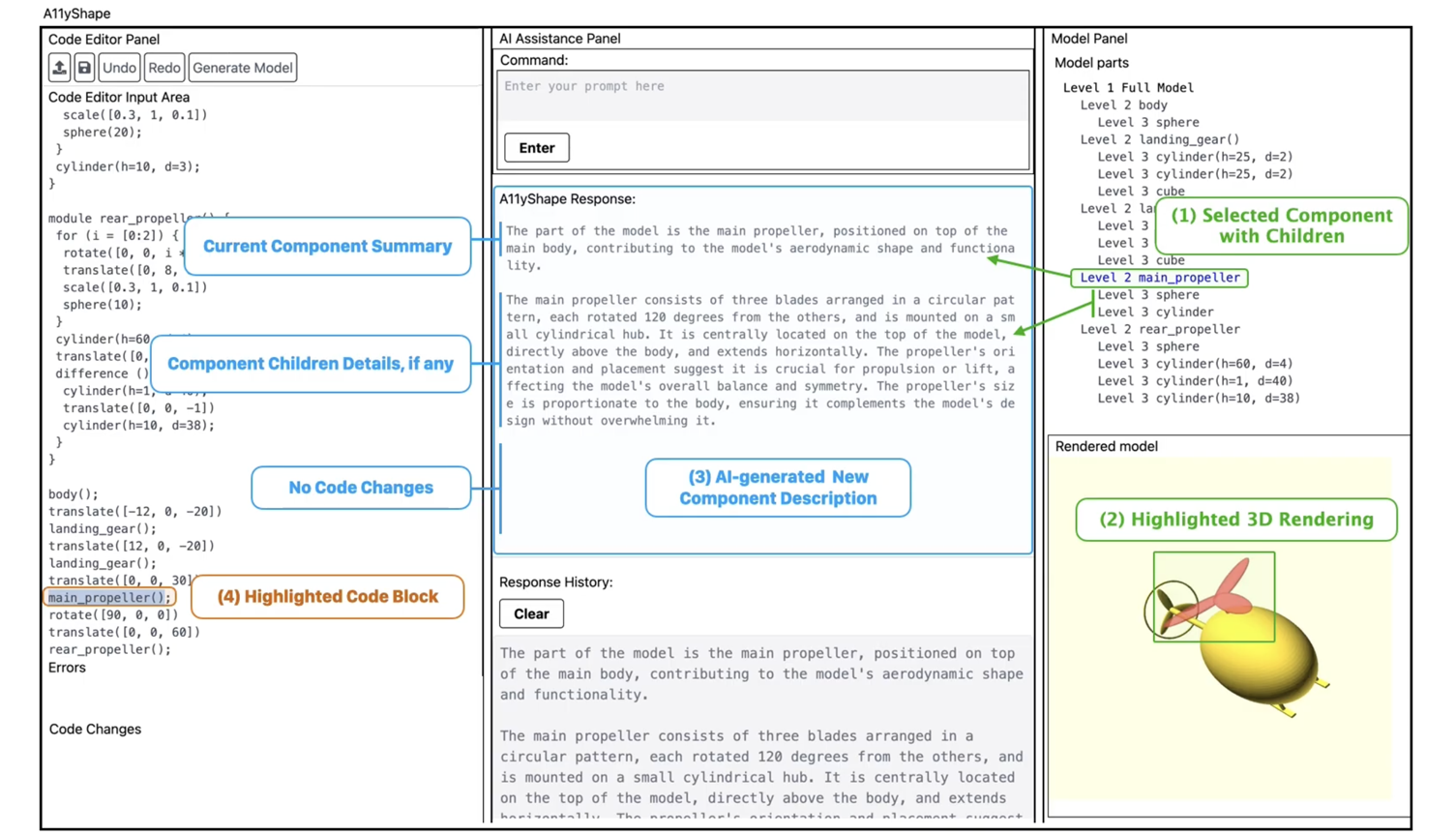

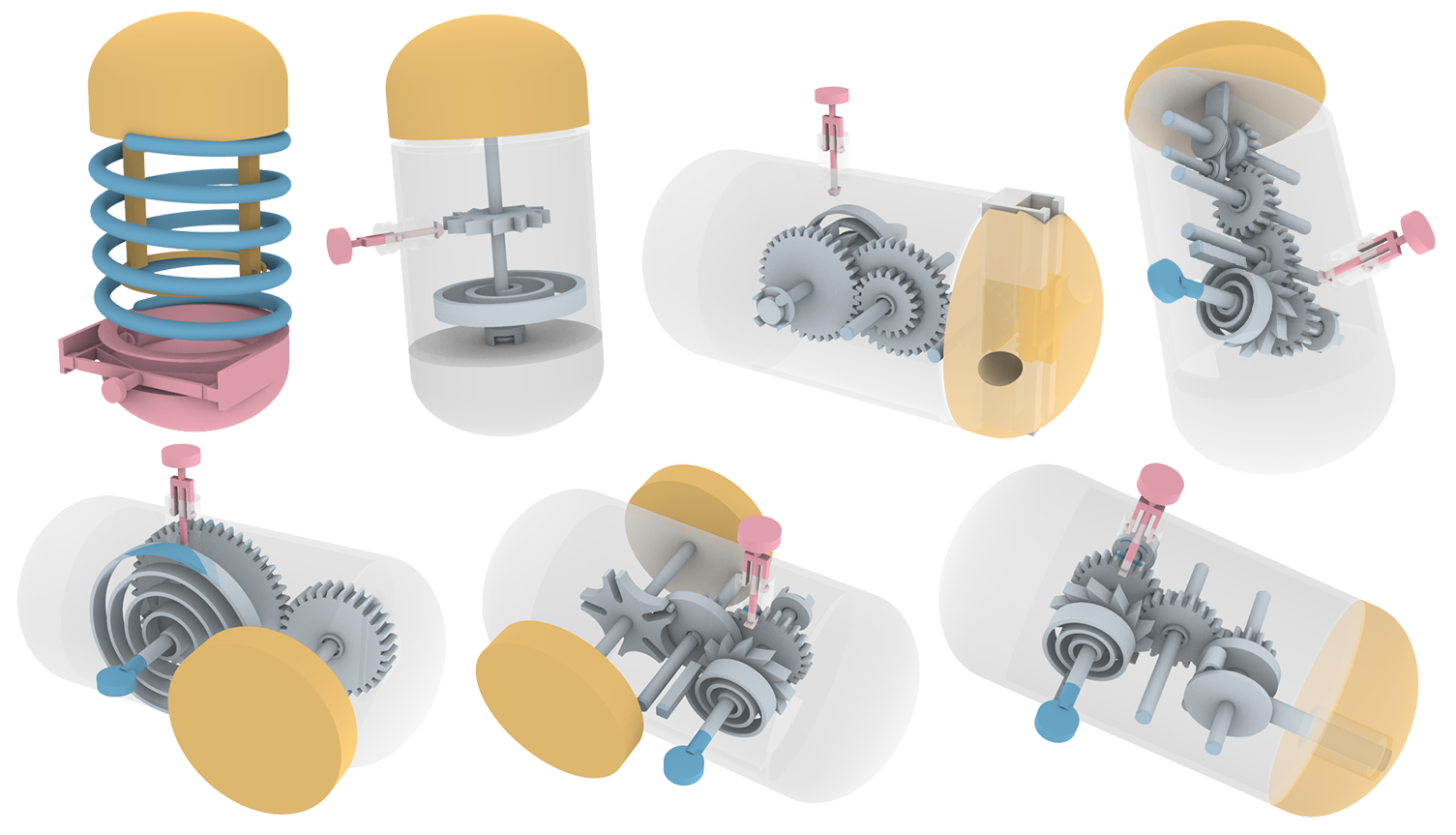

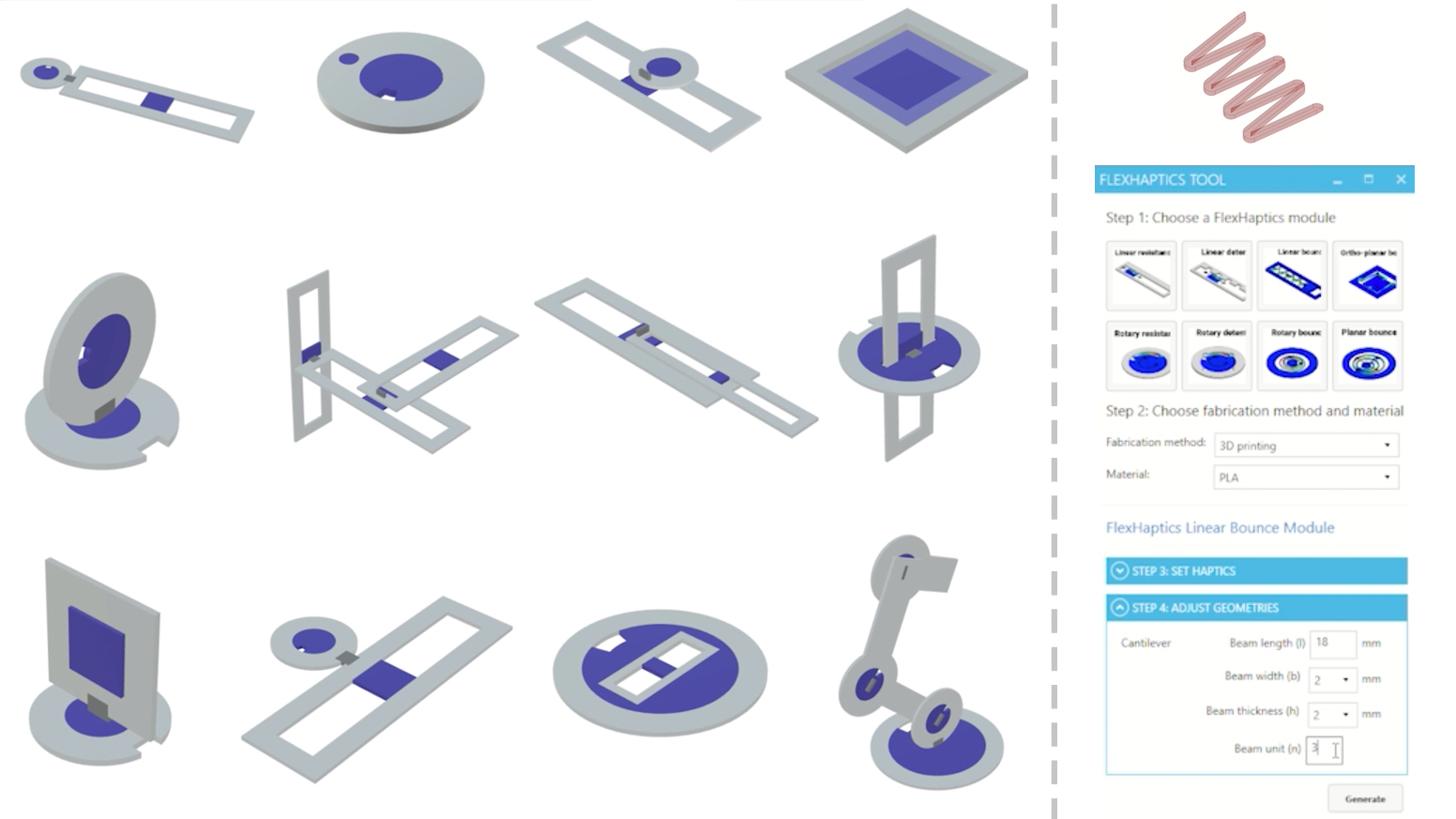

#1 Computational Design and AI-Assisted Tools for Creative Tasks

Creativity is a fundamental characteristic of human beings, enabling the construction and enrichment of individual experiences in the physical world. Yet, the joy of creation is often limited by the lack of supportive tools. My research group develops computational design and AI-assisted tools that extend users’ capabilities, facilitating tasks that were previously infeasible and thereby engineering their creative expression.

Example Publications

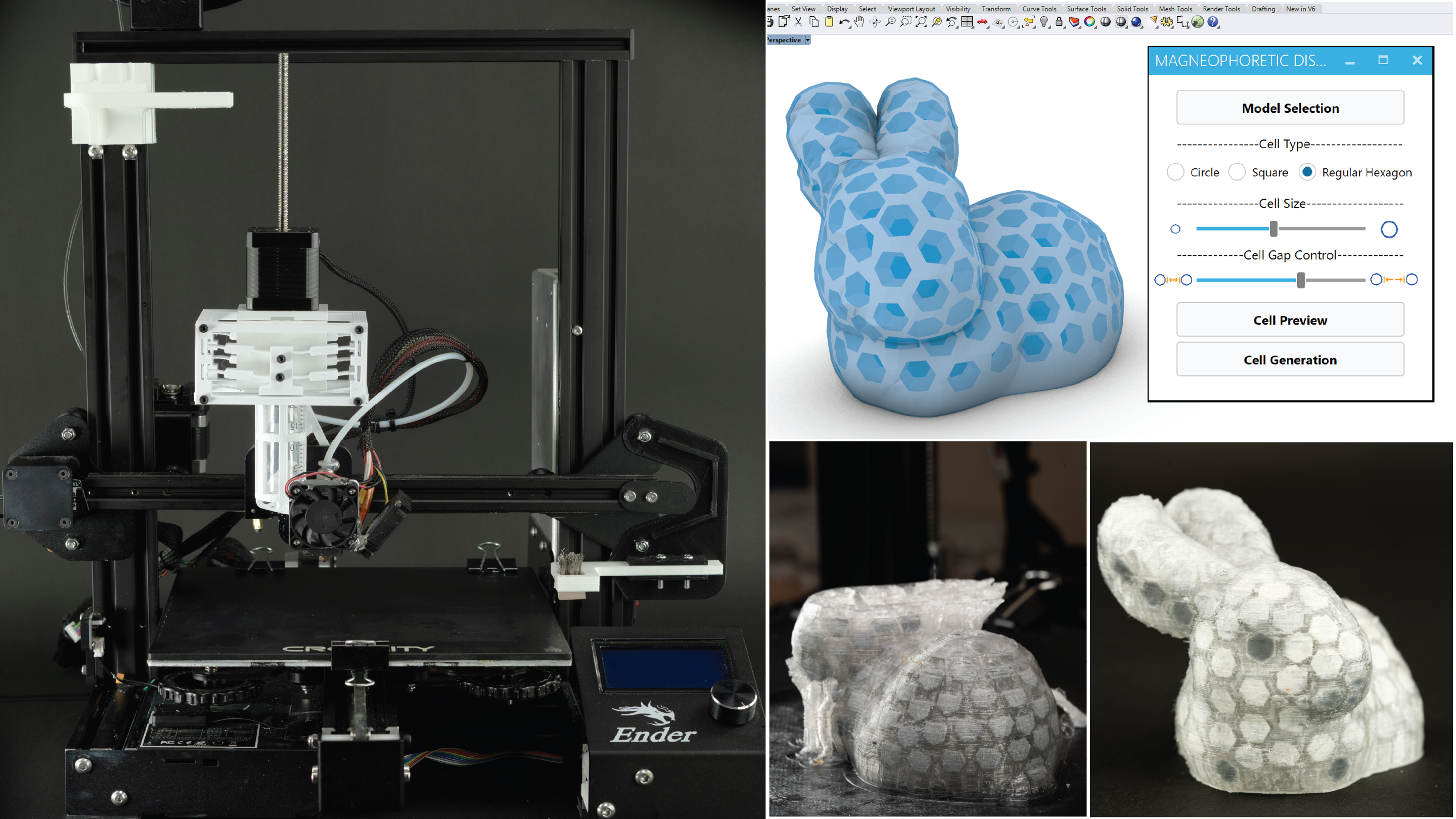

#2 Streamlined Making Pipelines for Personal Fabrication

While digital fabrication technologies enable people to create complex and personalized artifacts, their design and fabrication workflows often remain inaccessible to end users, particularly novices. My research investigates integrated and intelligent pipelines that streamline these processes and lower barriers to making interactive and customizable artifacts through emerging fabrication techniques and machines. Ultimately, this line of research seeks to democratize the means of making, enabling a diverse range of people to participate in personal fabrication.

Example Publications

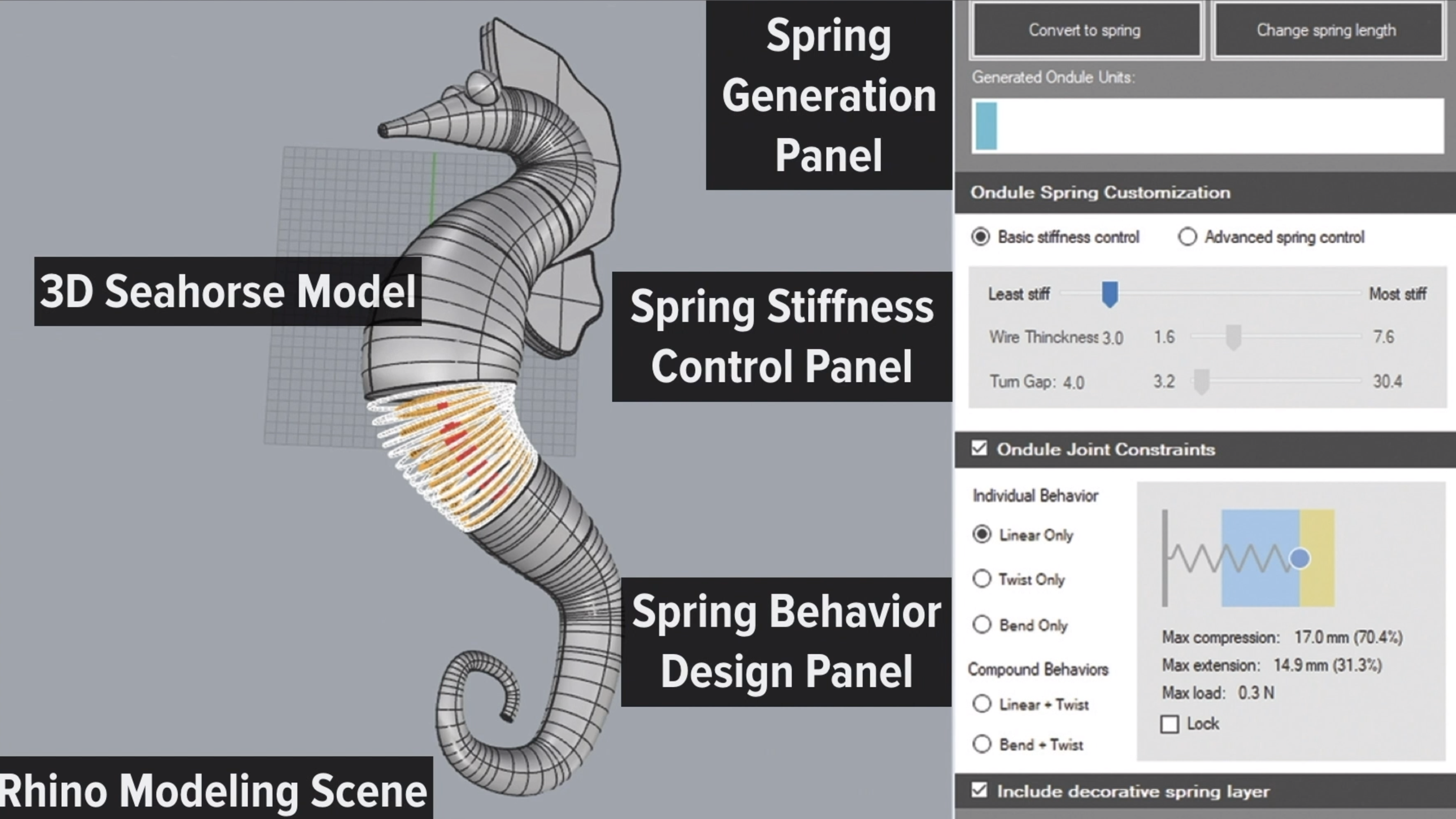

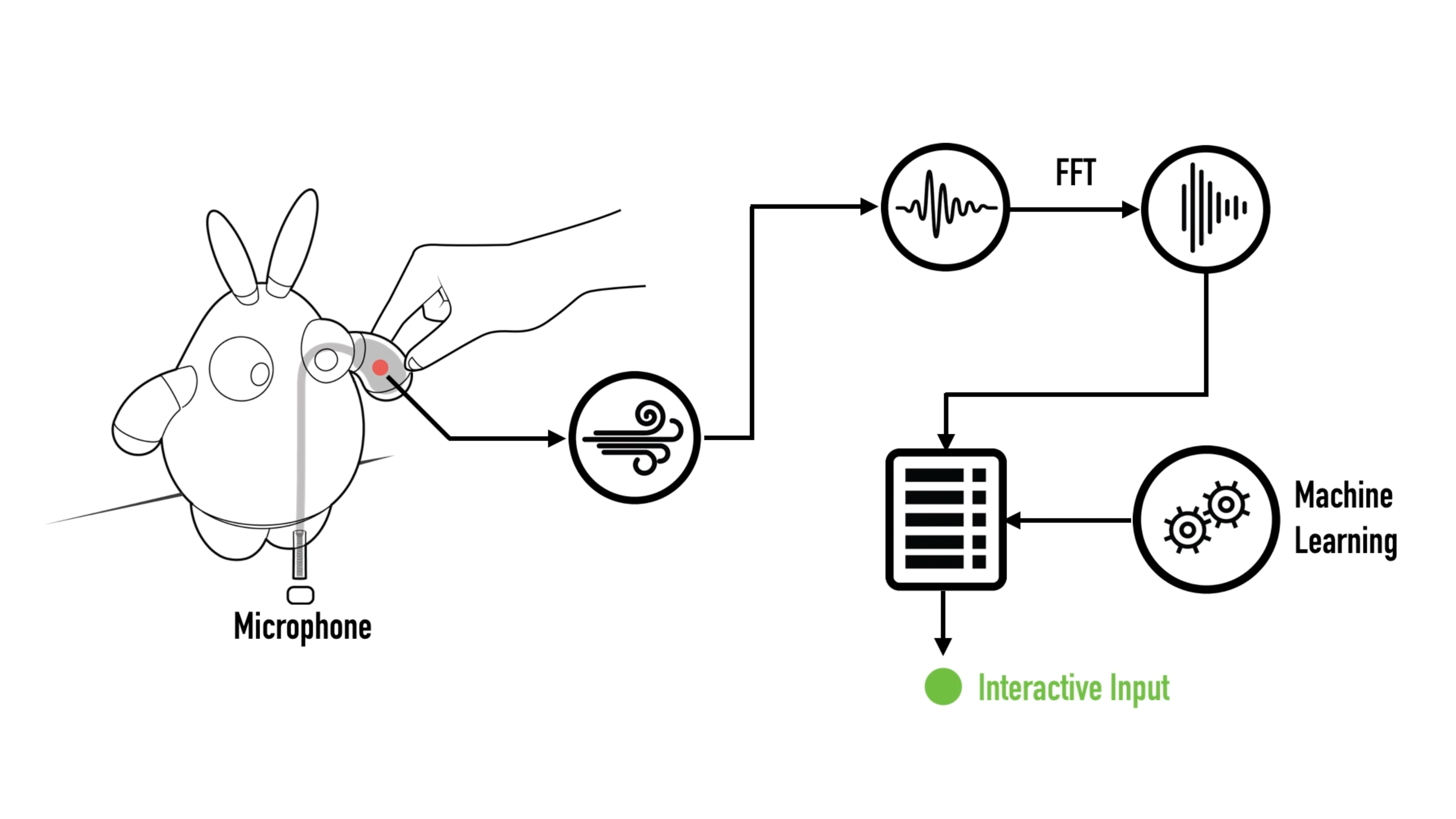

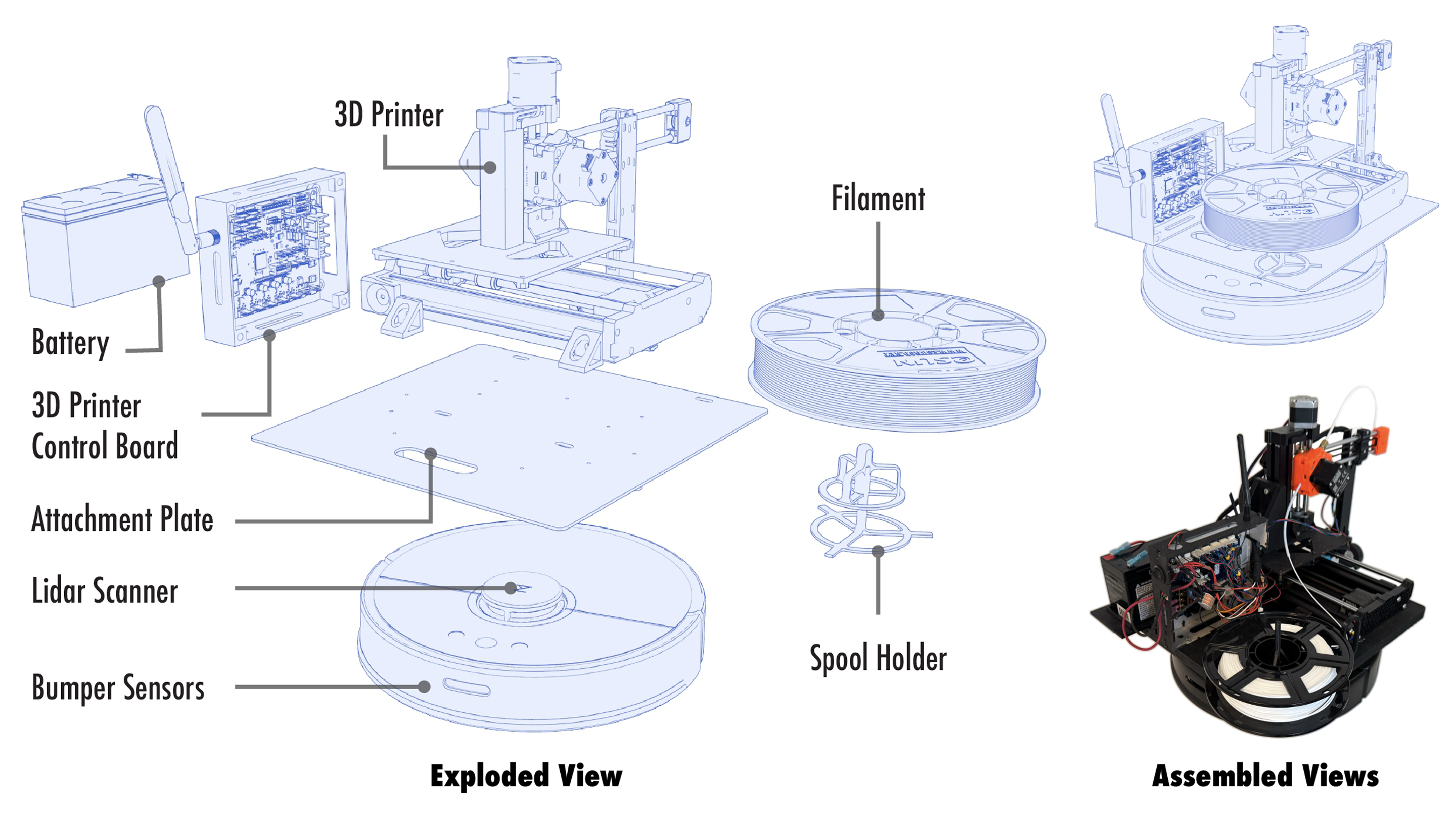

#3 Intelligent Physical Agents for Human Body-Centered and Environmental-Scale Interfacing and Interaction

We possess an ever-present interface—the human body—and continually interact with the dynamically changing environment. I envision a future of making in which individual users can create and customize intelligent agents that combine computational capabilities (e.g., interactivity and reasoning) with physical abilities (e.g., mobility and motor control). My research explores software and hardware approaches for constructing such intelligent agents, enabling body-centered and environmental-scale interfacing and interaction.

Example Publications

MakerWear: A Tangible Approach to Interactive Wearable Creation (CHI ’17) | Best Paper Award

Majeed Kazemitabaar, Jason McPeak, Alexander Jiao, Liang He, Thomas Outing, and Jon E. Froehlich

MobiPrint: A Mobile 3D Printer for Environment-Scale Design and Fabrication (UIST ’25)

Daniel Campos Zamora, Liang He, and Jon E. Froehlich

Recent Updates

01/2026:

Two papers conditionally accepted to CHI '26. Congrats, Ben, Hannah, and collaborators!

01/2026:

Invited to ASSETS '26 Program Committee.

01/2026:

SafetyBuilder accepted to IMWUT. Congrats, Jiawei and the team!

12/2025:

PufFab accepted to TEI '26 Work-in-Progress. Congrats, Angel and the team!

11/2025:

Workshop on wearables for personalized health accepted to CHI '26.

10/2025:

FluxLab accepted to TEI '26. Congrats, Hannah and the team!

09/2025:

Organized the CTM workshop and chaired one talk session at UIST '25.

08/2025:

Two papers on digital fabrication accepted to SCF '25. See you in Boston.

08/2025:

Started the professorship at UTD CS with two PhD students joining my group.

06/2025:

A11yShape accepted to ASSETS '25. See you in Denver.

06/2025:

Started working at the Programmable Products Lab at Keio University as a visiting Associate Professor. Thank you, Koya Narumi-san.

05/2025:

Started working at HKUST (GZ) as a visiting scholar. Thank you, Dr. Xin Tong.

05/2025:

Invited to CHI '26 Program Subcommittee: Hardware, Materials, and Fabrication.

Advised Students

PhD Students

Hired Research Associates

Courses

Fall 2025 (Undergraduate Course)

CS 4352: Introduction to HCI

Spring 2026 (Graduate Course)

CS 6326: Human Computer Interactions

Outreach & Initiatives

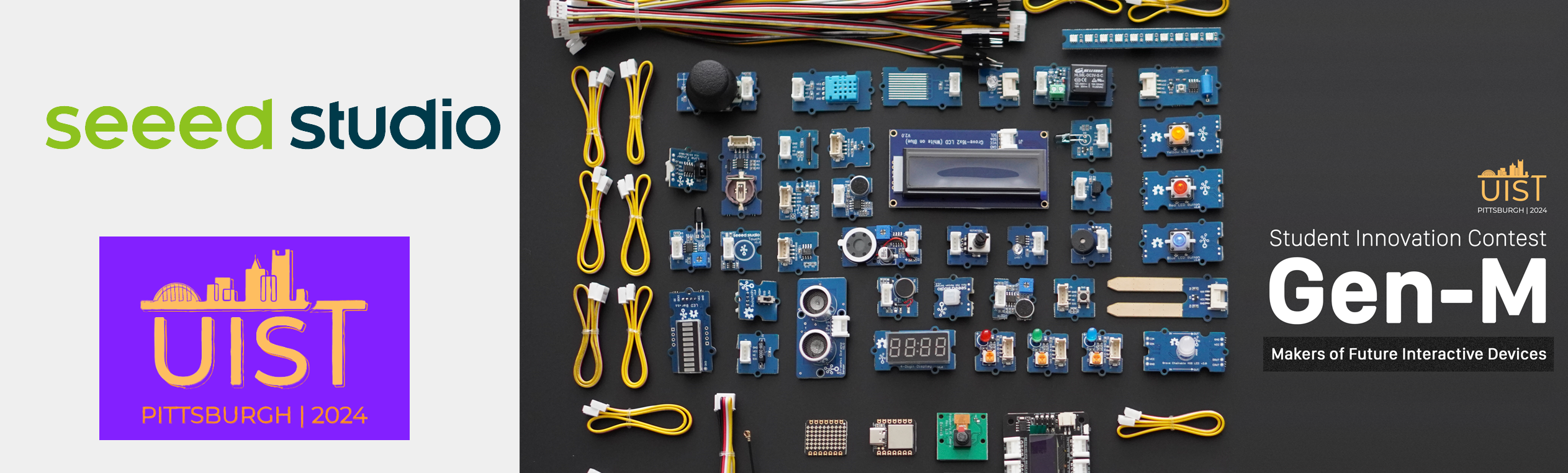

Selected and created a custom hardware kit—Gen-M Kit—that contains over 80 programmable modules provided by Seeed Studio and distributed the kits to eight student teams around the world.

Led and developed FabGalaxy—an integrated visualization tool for the MIT HCIE group's Personal Fabrication Research in HCI and Graphics